
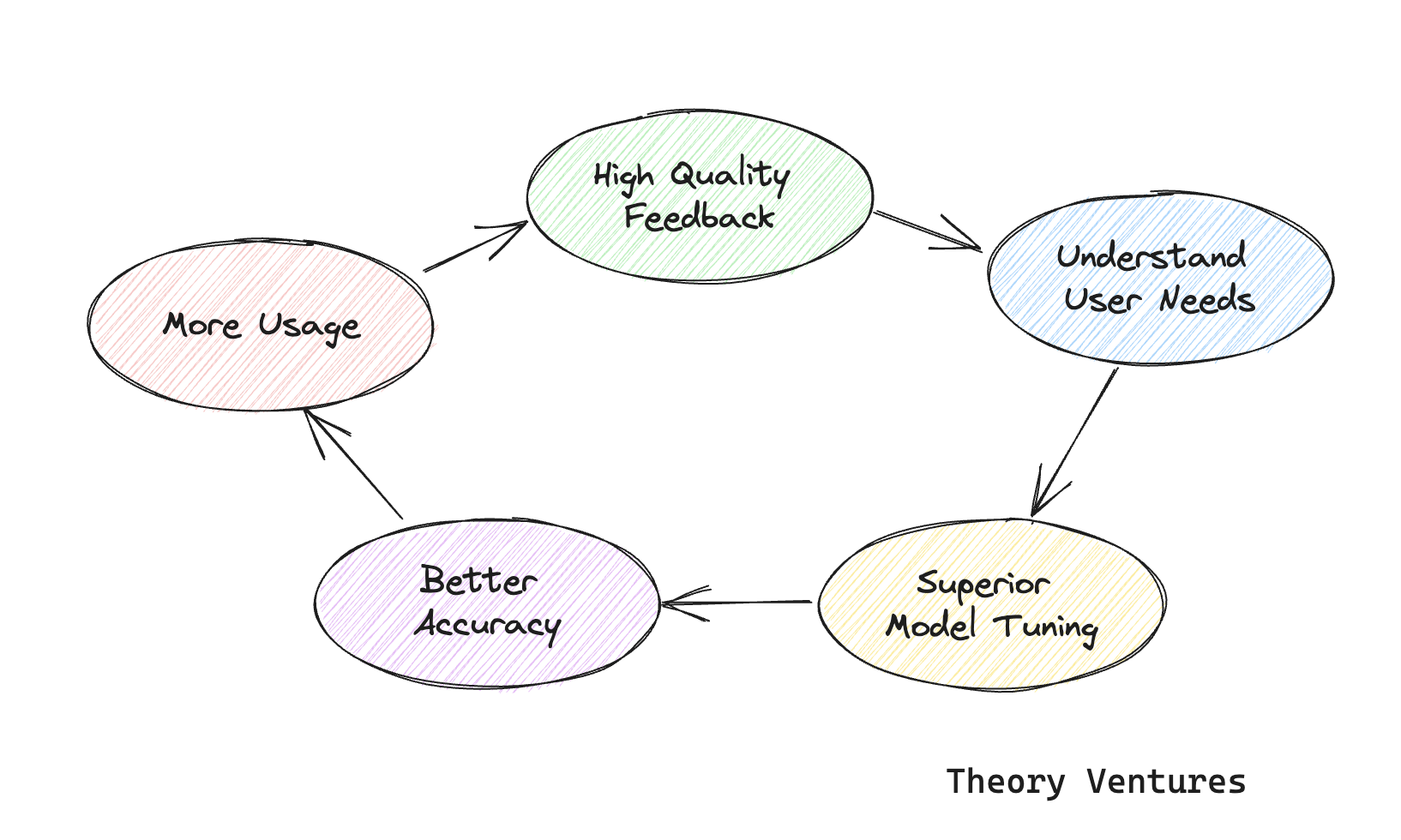

Eliciting product feedback elegantly is a competitive advantage for LLM software.

Over the weekend, I queried Google’s Bard and noticed the elegant feedback loop the product team has built into the product.

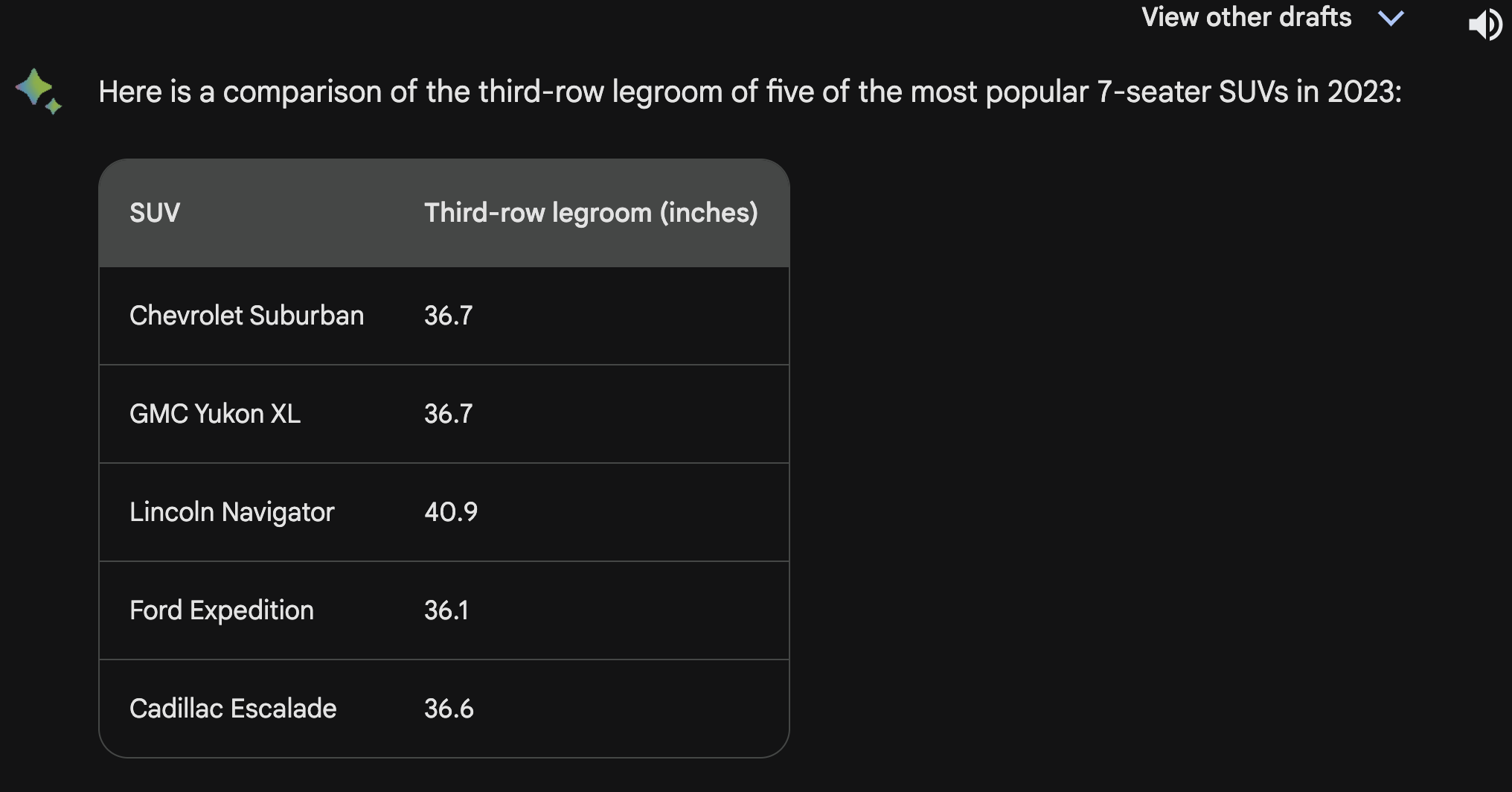

I asked Bard to compare the third-row legroom of the leading 7-passenger SUVs.

At the bottom of the response is a small G button, which double-checks the answer using Google searches.

I decided to click it. That’s what I would have been doing anyway: spot-checking some of the results.

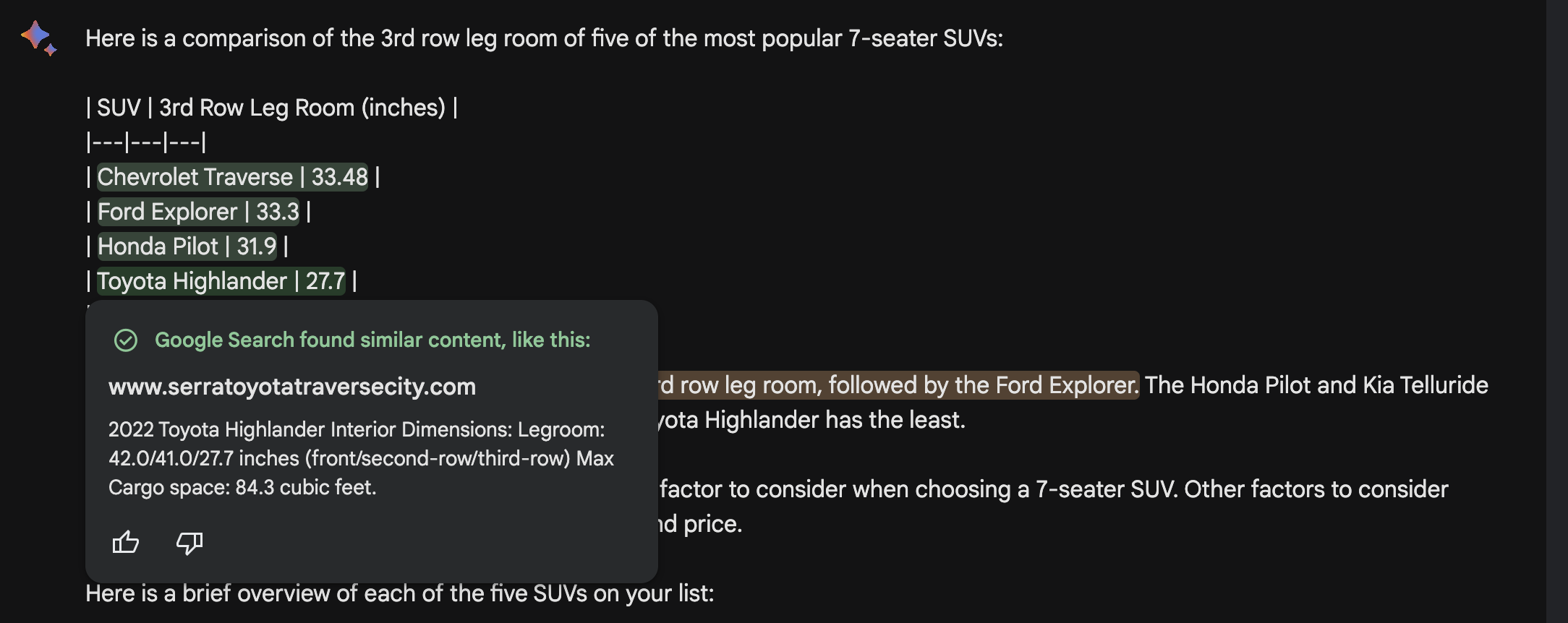

LLM systems aren’t deterministic. To an LLM, 1 might be greater than 4. If an LLM produces a few spurious results, the user won’t trust it.

Bard highlights verified facts in green and potentially inaccurate facts in red. I checked the green facts, and they were correct. The red ones were sometimes correct and sometimes not.

Besides saving me time, this lets me use a less-than-trusted system and still benefit from the accurate portion of the response, which should keep me coming back, all while improving the system for next time.

It’s symbiotic.

I wonder whether it will become the dominant feedback mechanism for LLM-enabled apps, replacing the now ubiquitous but deeply amorphous thumbs up/down.
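The post doesn’t describe how Bard records these signals, so what follows is only a hypothetical sketch in Python of why a per-claim check carries more information than a thumbs up/down. It assumes a response can be split into individual claims, each tagged with the green/red highlight from the search check and the user’s own verdict after spot-checking. The names `Highlight`, `ClaimCheck`, `outcome`, `summarize`, and `thumbs_signal` are invented for illustration and are not Bard’s API or data format.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Highlight(Enum):
    """Hypothetical labels mirroring the green/red colours described in the post."""
    GREEN = "likely_accurate"
    RED = "possibly_inaccurate"


@dataclass(frozen=True)
class ClaimCheck:
    """One factual claim from a response (assumed model, not Bard's real format)."""
    claim: str               # a single factual statement from the LLM answer
    highlight: Highlight     # what the search-based double-check reported
    user_says_correct: bool  # the user's own spot-check verdict


def outcome(check: ClaimCheck) -> str:
    """Compare the automated highlight with the user's verdict for one claim."""
    if check.highlight is Highlight.GREEN:
        return "confirmed" if check.user_says_correct else "missed_error"
    return "false_alarm" if check.user_says_correct else "caught_error"


def summarize(checks: list[ClaimCheck]) -> Counter:
    """Count per-claim outcomes, a signal a product team could track over time."""
    return Counter(outcome(c) for c in checks)


def thumbs_signal(checks: list[ClaimCheck]) -> bool:
    """What a thumbs up/down captures: one bit for the whole response."""
    return all(c.user_says_correct for c in checks)


if __name__ == "__main__":
    # Placeholder claims only; no real legroom figures are implied.
    checks = [
        ClaimCheck("SUV A third-row legroom", Highlight.GREEN, True),
        ClaimCheck("SUV B third-row legroom", Highlight.RED, True),
        ClaimCheck("SUV C third-row legroom", Highlight.RED, False),
    ]
    print(summarize(checks))
    print(thumbs_signal(checks))
```

For a response like the SUV comparison, where the green claims held up and the red ones were mixed, `summarize` separates confirmed facts, false alarms, and caught errors, while `thumbs_signal` collapses the same spot-check into a single bit.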
